Technology underpins every business these days. As emerging technologies such as robotic process automation (RPA), machine learning, and AI become more prevalent in operations, so do the accompanying risks and the legal and ethical hurdles that companies must clear.

The companies that can best generate value with AI while managing its risks will lead the pack. According to McKinsey analysts, organizations must place business-minded legal and risk-management teams alongside the data science team at the center of the AI development process. Bringing them in only after AI models have been built is inefficient and time-consuming.

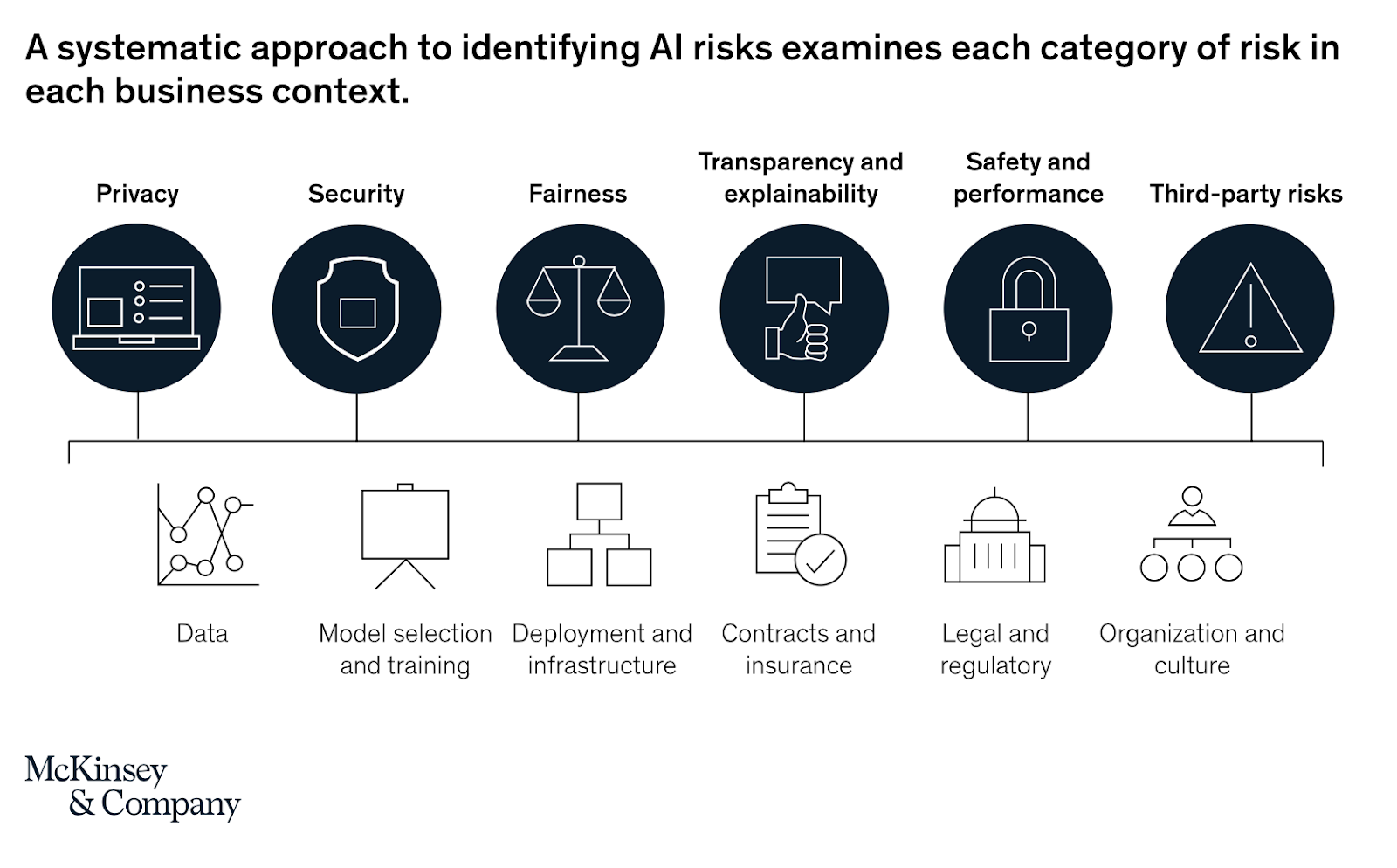

McKinsey identifies six major categories of AI risk:

- Privacy – Data is essential to any AI model; however, organizations must be aware of how that data may be collected and used. Violating consumer trust creates reputational risk and erodes customer loyalty.

- Security – New AI models have complex, evolving vulnerabilities that create both novel and familiar risks.

- Fairness – Beware of inadvertently encoding bias into AI models in ways that could harm particular classes and groups.

- Transparency and explainability – Be transparent about how an AI model was developed, both to comply with legal mandates and to maintain customer trust.

- Safety and performance – Proper testing helps ensure that AI applications operate safely and perform as intended.

- Third-party risks – Understand the risk-mitigation and governance standards each third party applies, and independently test and audit all high-stakes inputs.

These risk categories can and should guide where mitigation measures are directed.

After cataloging and assessing the most prevalent risks, the next step is to sequence mitigation measures for each one. A strong sequencing methodology lets AI practitioners and legal personnel work through AI use cases so that their resources stay efficient and focused, say McKinsey analysts.

“Due diligence data, such as background checks, credit reports, questionnaires, and annual assessments, can be aggregated and reviewed to perform trending analysis and calculate risk scores,” a recent Anti-Fraud Collaboration (AFC) report notes. “Third parties can be compared to one another to identify patterns, relationships, and anomalies.”
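To make the AFC's description more concrete, here is a minimal sketch of aggregating due diligence signals into a per-vendor risk score and comparing third parties to spot outliers. Everything specific here is an assumption for illustration, not the AFC's or McKinsey's method: the signal names, the weights in the hypothetical `WEIGHTS` table, the 0-to-1 normalization, and the use of a simple z-score against the peer group as the anomaly test.

```python
from dataclasses import dataclass
from statistics import mean, pstdev

# Hypothetical weights (assumption): how much each normalized
# due diligence signal contributes to a third party's risk score.
WEIGHTS = {
    "background_check_flags": 0.3,
    "credit_risk": 0.4,
    "questionnaire_gaps": 0.2,
    "assessment_findings": 0.1,
}


@dataclass
class ThirdParty:
    name: str
    # Each signal is assumed to be pre-normalized to 0.0 (low risk) .. 1.0 (high risk).
    signals: dict[str, float]


def risk_score(tp: ThirdParty) -> float:
    """Weighted sum of the normalized signals; a missing signal counts as 0."""
    return sum(w * tp.signals.get(key, 0.0) for key, w in WEIGHTS.items())


def flag_anomalies(parties: list[ThirdParty], threshold: float = 2.0) -> list[str]:
    """Return names whose score is more than `threshold` population
    standard deviations above the peer-group mean (a simple z-score test)."""
    scores = {tp.name: risk_score(tp) for tp in parties}
    mu = mean(scores.values())
    sigma = pstdev(scores.values())
    if sigma == 0:
        return []  # all peers scored identically, so nothing stands out
    return [name for name, s in scores.items() if (s - mu) / sigma > threshold]


def uniform(level: float) -> dict[str, float]:
    """Helper for the example: give every signal the same value."""
    return {key: level for key in WEIGHTS}


if __name__ == "__main__":
    vendors = [
        ThirdParty("vendor-a", uniform(0.1)),
        ThirdParty("vendor-b", uniform(0.2)),
        ThirdParty("vendor-c", uniform(0.1)),
        ThirdParty("vendor-d", uniform(0.15)),
        ThirdParty("vendor-e", uniform(0.9)),
    ]
    for v in vendors:
        print(v.name, round(risk_score(v), 2))
    print("anomalies:", flag_anomalies(vendors, threshold=1.5))
```

With only five peers, a population z-score cannot exceed 2, which is why the example lowers the threshold to 1.5. In practice, the weights and threshold would need to be calibrated against an organization's own risk appetite and historical data.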

An informed approach to prioritizing and managing AI risk keeps organizations one step ahead of emerging threats, ensures that legal guidance is built into development, and reinforces technical best practices.